MediaPipe + OpenCV五分钟搞定手势识别

描述

MediaPipe介绍

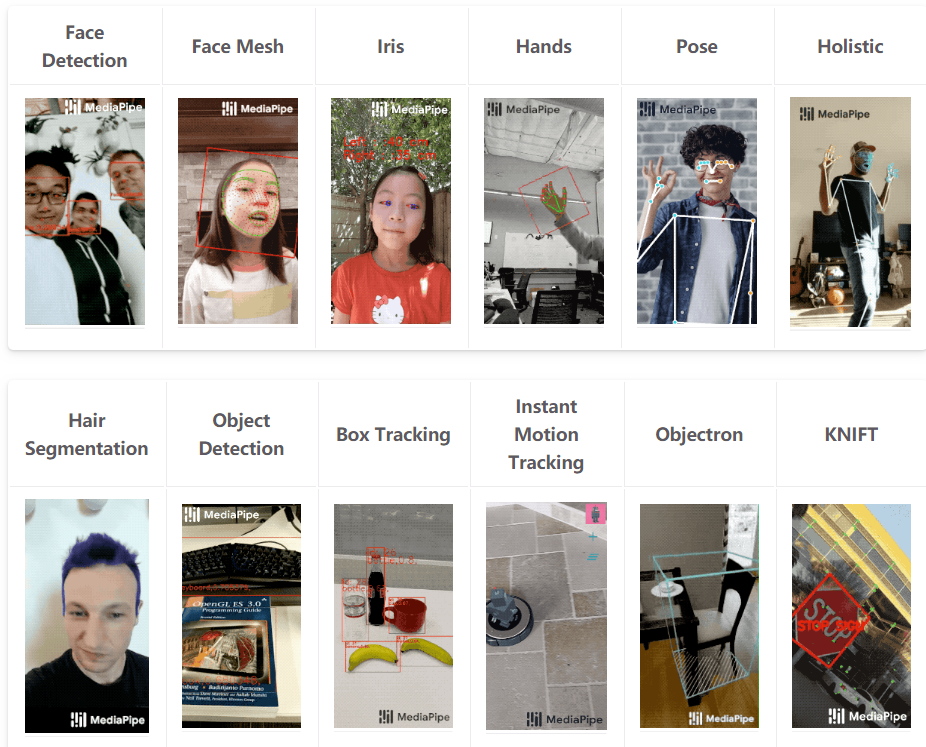

这个是真的,首先需要从Google在2020年发布的mediapipe开发包说起,这个开发包集成了人脸、眼睛、虹膜、手势、姿态等各种landmark检测与跟踪算法。

https://google.github.io/mediapipe/

请看下图比较详细

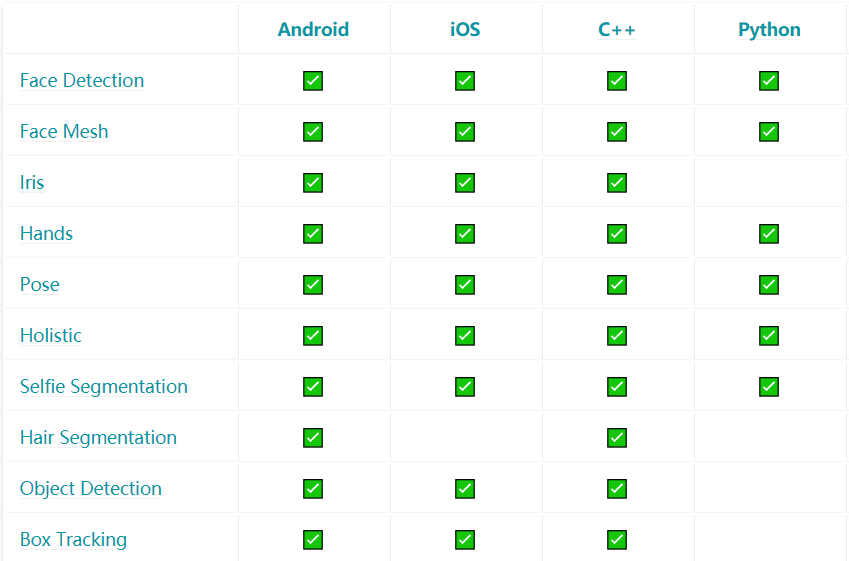

是个不折不扣的现实增强的宝藏工具包,特别实用!支持的平台跟语言也非常的丰富,图示如下:

只说一遍,感觉要逆天了,依赖库只有一个就是opencv,python版本的安装特别简单,直接运行下面的命令行:

pip install mediapipe

手势landmark检测

直接运行官方提供的Python演示程序,需要稍微修改一下,因为版本更新了,演示程序有点问题,改完之后执行运行视频测试,完美get到手势landmark关键点:

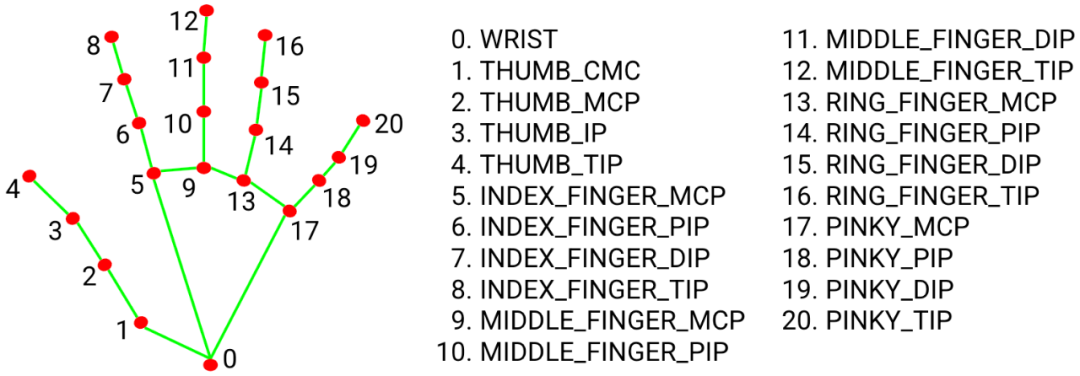

手势landmark的关键点编号与解释如下:

修改后的代码如下:

import cv2

import mediapipe as mp

mp_drawing = mp.solutions.drawing_utils

mp_hands = mp.solutions.hands

# For webcam input:

cap = cv2.VideoCapture(0)

with mp_hands.Hands(

min_detection_confidence=0.5,

min_tracking_confidence=0.5) as hands:

while cap.isOpened():

success, image = cap.read()

if not success:

print("Ignoring empty camera frame.")

# If loading a video, use 'break' instead of 'continue'.

continue

# To improve performance, optionally mark the image as not writeable to

# pass by reference.

image.flags.writeable = False

image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

results = hands.process(image)

# Draw the hand annotations on the image.

image.flags.writeable = True

image = cv2.cvtColor(image, cv2.COLOR_RGB2BGR)

if results.multi_hand_landmarks:

for hand_landmarks in results.multi_hand_landmarks:

mp_drawing.draw_landmarks(

image,

hand_landmarks,

mp_hands.HAND_CONNECTIONS)

cv2.imwrite('D:/result.png', cv2.flip(image, 1))

# Flip the image horizontally for a selfie-view display.

cv2.imshow('MediaPipe Hands', cv2.flip(image, 1))

if cv2.waitKey(5) & 0xFF == 27:

break

cap.release()

手势识别

基于最简单的图象分类,收集了几百张图象,做了一个简单的迁移学习,实现了三种手势分类,运行请看视频:

声明:本文内容及配图由入驻作者撰写或者入驻合作网站授权转载。文章观点仅代表作者本人,不代表电子发烧友网立场。文章及其配图仅供工程师学习之用,如有内容侵权或者其他违规问题,请联系本站处理。

举报投诉

-

基于毫米波雷达的手势识别算法2024-06-05 0

-

10分钟搞定pld2013-08-30 0

-

红外手势识别方案 红外手势感应模块 红外识别红外手势识别2014-08-27 0

-

【UT4418申请】手势识别系统2015-09-23 0

-

五分钟学会CPLD资料2016-11-15 0

-

【NanoPi Duo开发板试用申请】基于nanopi的手势识别2017-10-11 0

-

手势识别装置介绍2021-08-06 0

-

手势识别控制器制作2021-09-07 0

-

五分钟读懂WiFi基础知识2021-12-01 0

-

笔记本潜在的五大危险(五分钟就搞定)2010-01-23 349

-

手势识别系统的程序和资料说明2019-04-28 1019

-

opencv 轮廓放大_OpenCV开发笔记(六十六):红胖子8分钟带你总结形态学操作-膨胀、腐蚀、开运算、闭运算、梯2021-11-24 478

-

手势识别技术及其应用2023-06-14 2038

-

车载手势识别技术的原理及其应用2023-06-27 1321

-

如何用OpenCV进行手势识别--基于米尔全志T527开发板2024-12-13 698

全部0条评论

快来发表一下你的评论吧 !